By Maia Powell, Arnold D. Kim, and Paul E. Smaldino

Individuals regularly broadcast information about their identities in public forums. In fact, social scientists recognize that an important function of public communication is to signal one’s membership in some categorizable subset of individuals [4, 9]. Social identity provides a lens that shapes and alters how humans perceive the world around them. In the contemporary U.S., political partisanship is one of the most salient identity categories [8]; as such, Americans on the political left or right appear to inhabit very different mental worlds. Furthermore, left-right political orientation seems to correlate with reliably different personality profiles and result in correspondingly different behavioral patterns [1]. Left and right partisans may therefore perceive identical stimuli in dramatically different lights [6, 11].

Social media platforms such as Twitter have become wide forums for identity signaling. A particularly interesting affordance for doing so is the hashtag, which can mark a tweet—and by extension the tweet’s author—as belonging or declaring allegiance to a particular identity group. Among the most widespread and influential sociopolitical hashtags in recent years is #BlackLivesMatter, which gained significance after the murders of Trayvon Martin and Michael Brown and subsequent lack of criminal convictions for their killers [2]. The hashtag later evolved to bring awareness to other acts of injustice against Black members of the population, primarily by police. In response, the hashtag #AllLivesMatter emerged to assert “colorblind” attitudes that are ostensibly at odds with #BlackLivesMatter sentiments [3, 5, 10]. Although neither hashtag is formally associated with a political party, they have become entangled in the increasingly polarized landscape of American political identity [3, 7].

To address this phenomenon, we investigated the way in which demographic variance moderates the perception of tweets that are tagged with the #BlackLivesMatter and #AllLivesMatter hashtags as being offensive or racist. To assess the influence of hashtags themselves, we removed hashtags from tweets in which they originally appeared and appended new hashtags to selected neutral tweets.

Twitter Stimuli and Qualtrics Survey

To gather data, we scraped tweets from 2020 that contained either the hashtag #AllLivesMatter or #BlackLivesMatter. We then filtered the tweets to ensure that they only contained one hashtag, did not mention other Twitter handles, and had no attachments. By further filtering the tweets manually, we ensured that the hashtag occurred at the end of each tweet and was not the subject of the message (e.g., “My least favorite hashtag is #BlackLivesMatter”). We also sampled neutral tweets from previous studies that used crowdsourcing to evaluate if the tweets were racist and/or sexist [12], only selecting tweets that appeared to be about politically neutral content.
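The automatic part of this filtering can be sketched in a few lines of Python. This is a minimal illustration rather than the authors' actual pipeline: the `Tweet` record, its field names, and the regular expressions are assumptions made for the example.

```python
import re
from dataclasses import dataclass

HASHTAG = re.compile(r"#\w+")
MENTION = re.compile(r"@\w+")
TARGETS = {"#blacklivesmatter", "#alllivesmatter"}


@dataclass
class Tweet:
    # Hypothetical record; the field names are illustrative only.
    text: str
    has_attachment: bool = False


def passes_automatic_filters(tweet: Tweet) -> bool:
    """Keep tweets with exactly one (target) hashtag, no mentions,
    no attachments, and the hashtag at the very end of the text."""
    tags = HASHTAG.findall(tweet.text)
    if len(tags) != 1 or tags[0].lower() not in TARGETS:
        return False
    if MENTION.search(tweet.text) or tweet.has_attachment:
        return False
    return tweet.text.rstrip().endswith(tags[0])
```

Note that a tweet such as “My least favorite hashtag is #BlackLivesMatter” passes every check in this sketch, which is why a manual pass is still needed to exclude tweets whose subject is the hashtag itself.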

Figure 1. Participant view of instructions (top) and one sample tweet evaluation (bottom) on Qualtrics. Figure courtesy of the authors.

In our survey setup, participants first consented to the study, completed a CAPTCHA verification, and received necessary instructions; they were then randomly assigned one of 10 possible datasets that each contained 30 tweets in a random order. The participants evaluated whether one could perceive the contents of each tweet as racist, offensive, both, or neither, and decided whether these perceptions applied to (i) themselves personally, (ii) individuals within their social network, and/or (iii) individuals outside of their social network. To document the effect of hashtags on perceptions, some participants reviewed tweets with hashtags and others examined tweets without hashtags. Individuals who analyzed datasets with hashtags saw the raw tweets wherein hashtags were already present, as well as neutral tweets with #AllLivesMatter or #BlackLivesMatter appended. Participants who were assigned the dataset without hashtags viewed the #AllLivesMatter and #BlackLivesMatter tweets with the hashtag omitted, as well as unaltered neutral tweets. Figure 1 provides the participant view of the study.
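The two stimulus conditions and the random assignment described above can be sketched as follows. The helper names are hypothetical, and the survey itself ran on Qualtrics, so this only illustrates the logic and is not the survey code.

```python
import random
import re

# Matches one hashtag (and surrounding whitespace) at the end of a tweet.
TRAILING_HASHTAG = re.compile(r"\s*#\w+\s*$")


def strip_trailing_hashtag(text: str) -> str:
    """No-hashtag condition: drop the hashtag that closes the tweet."""
    return TRAILING_HASHTAG.sub("", text)


def append_hashtag(text: str, tag: str) -> str:
    """Hashtag condition for neutral tweets: add a hashtag at the end."""
    return f"{text.rstrip()} {tag}"


def assign_dataset(datasets: list[list[str]], rng: random.Random) -> list[str]:
    """Pick one dataset uniformly at random and shuffle its tweets."""
    tweets = list(rng.choice(datasets))
    rng.shuffle(tweets)
    return tweets
```

With 10 datasets of 30 tweets each, `assign_dataset(datasets, random.Random())` returns the 30 tweets of one dataset in a fresh random order.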

After completing the tweet evaluations, participants filled out a demographic survey that included questions about age, gender, familiarity with hashtags, news consumption, and religiosity. We also modified a questionnaire to determine political orientation on a scale from \(-10\) to \(+10\), where scores of \(-10\) and \(+10\) respectively indicate that a participant is maximally liberal or maximally conservative. We classified participants with scores of less than \(0\) as liberal and those with scores greater than \(0\) as conservative. We recruited 1,428 people through Amazon Mechanical Turk; after omissions based upon check questions, 1,244 viable participants remained. The resulting participant population was predominantly male and skewed heavily towards liberal and White individuals.
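The classification rule amounts to a sign check on the political score. The sketch below is illustrative only; in particular, the text does not say how a score of exactly \(0\) is treated, so leaving it unclassified is an assumption of this example.

```python
from typing import Optional


def classify(score: float) -> Optional[str]:
    """Map a political score in [-10, 10] to a partisan label."""
    if not -10 <= score <= 10:
        raise ValueError("political score must lie in [-10, 10]")
    if score < 0:
        return "liberal"
    if score > 0:
        return "conservative"
    # Assumption: the source does not state how a score of 0 is handled.
    return None
```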

Results

To understand the relationship between demographics and corresponding evaluations, we first examined the frequency of racist and offensive ratings as a function of individuals’ demographic characteristics. Among all of the demographic factors that we assessed, political orientation was the strongest predictor of participants’ impressions of tweets as racist or offensive. Individuals on the political left rated #AllLivesMatter tweets as more offensive and racist than #BlackLivesMatter tweets, whereas individuals on the political right rated #BlackLivesMatter tweets as more offensive and racist than #AllLivesMatter tweets (see Figure 2).

Figure 2. Relative frequencies of racist and offensive ratings for #AllLivesMatter and #BlackLivesMatter tweets for (2a) personal, (2b) within personal social network, and (2c) outside of personal social network evaluations, given as a function of political score (with \(-10\) being maximally liberal and \(+10\) being maximally conservative). We calculate relative frequency by dividing racist or offensive counts by total counts. Relative frequencies and regressions are shown with 95 percent confidence intervals. Figure courtesy of the authors.
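As a small worked example of the relative frequency in the caption, the sketch below divides the number of racist or offensive ratings by the total number of ratings. The caption does not say how the confidence intervals were computed, so the normal-approximation (Wald) binomial interval used here is an assumption, as are the function and parameter names.

```python
import math
from statistics import NormalDist


def relative_frequency(flagged: int, total: int, confidence: float = 0.95):
    """Return (frequency, lower, upper) for `flagged` ratings out of `total`.

    The interval is a Wald interval, which is an assumption of this sketch
    and not necessarily the method behind the published figure.
    """
    if total <= 0 or not 0 <= flagged <= total:
        raise ValueError("need 0 <= flagged <= total and total > 0")
    p = flagged / total
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    half_width = z * math.sqrt(p * (1 - p) / total)
    return p, max(0.0, p - half_width), min(1.0, p + half_width)
```

For instance, 30 racist ratings out of 100 evaluations give a relative frequency of 0.3 with an approximate 95 percent interval of roughly 0.21 to 0.39.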

When participants were asked to imagine how individuals within their personal social networks would respond to the tweets, the patterns of ratings were nearly identical to their own personal evaluations (see Figure 2b). This outcome suggests that they expect cohesion and agreement from those who are close to them. However, the association between political orientation and perceptions of tweets as racist or offensive did not hold when participants were asked to imagine how someone outside of their social network would react (see Figure 2c). Their responses suggest an understanding that their judgment of tweets as racist or offensive would not be shared by everyone.

The effect of hashtag presence was most pronounced among left-leaning participants, who often rated tweets with #AllLivesMatter as both racist and offensive. A similar, albeit weaker, effect occurred with right-leaning participants who marked tweets with #BlackLivesMatter as racist. Overall, the presence of a hashtag increased the likelihood that partisans in both cases would perceive tweet content as racist and/or offensive.

Figure 3. Feature importances for full random forest models with all eight predictors. Feature importances lie in \([0,1]\) and sum to \(1\); \(0\) indicates that a feature is not important at all, and \(1\) indicates that it is of utmost importance. 3a. Evaluations with hashtags. 3b. Evaluations without hashtags. Figure courtesy of the authors.

To verify that political orientation was the strongest predictor of tweet perception, we constructed random forest models to predict racist and offensive evaluations as a function of age, gender, race, four different religiosity variables, and political orientation. By evaluating feature importances, we found that the political orientation score is ranked substantially higher than all other predictors for each model (see Figure 3).
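A feature-importance analysis of this kind can be sketched with scikit-learn's `RandomForestClassifier`. Everything below is an assumption made for illustration: the data are synthetic, the column names (including the four `religiosity_*` placeholders) and numeric codings are hypothetical, and the article does not state which software or hyperparameters were used.

```python
import numpy as np
from sklearn.ensemble import RandomForestClassifier

# Hypothetical names for the eight predictors.
FEATURES = ["age", "gender", "race", "religiosity_1", "religiosity_2",
            "religiosity_3", "religiosity_4", "political_score"]

rng = np.random.default_rng(0)
n = 1000
X = np.column_stack([
    rng.integers(18, 80, n),        # age
    rng.integers(0, 3, n),          # gender (arbitrary coding)
    rng.integers(0, 5, n),          # race (arbitrary coding)
    rng.integers(1, 6, (n, 4)),     # four religiosity variables
    rng.uniform(-10, 10, n),        # political score
])

# Synthetic label: a tweet is flagged more often by lower (more liberal)
# scores, plus noise. This is NOT the study's data.
y = (X[:, -1] + rng.normal(0, 3, n) < 0).astype(int)

model = RandomForestClassifier(n_estimators=100, random_state=0).fit(X, y)

# Impurity-based importances: non-negative and normalized to sum to 1.
importances = dict(zip(FEATURES, model.feature_importances_))
ranked = sorted(importances.items(), key=lambda kv: kv[1], reverse=True)
```

In this toy setup the label is driven by the political score alone, so `ranked[0]` is the political score; on real data the ranking is the quantity of interest.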

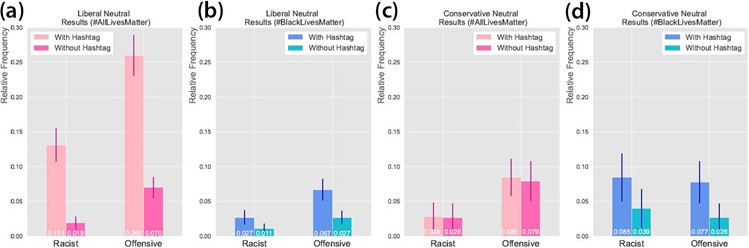

Unsurprisingly, participants were much less likely to rate neutral tweets as racist or offensive than #AllLivesMatter and #BlackLivesMatter tweets (see Figure 4). However, the artificial addition of hashtags to neutral tweets increased the likelihood that individuals would perceive the tweets as racist or offensive. In particular, the addition of #AllLivesMatter to neutral tweets was associated with a large increase in racist and/or offensive ratings among liberal participants, while the addition of #BlackLivesMatter to neutral tweets was associated with a moderate increase in racist and/or offensive ratings among both liberals and conservatives. Though we were surprised that #BlackLivesMatter increased perceptions of neutral tweets as racist and/or offensive among liberal participants, we thought such a response might be provoked by the juxtaposition of a serious matter (the hashtag) in a banal context.

Figure 4. Evaluations of neutral tweets by political score with a 90 percent confidence interval. We calculate relative frequency by dividing racist and offensive counts by total counts. 4a–4b. Independent t-tests revealed statistically significant differences between liberal participant evaluations of neutral tweets with appended hashtags versus without. The addition of #AllLivesMatter had a more significant effect (corresponding to p-values of \(2.359 \times 10^{-13}\) and \(1.746 \times 10^{-21}\) for racist and offensive evaluations, respectively) than the addition of #BlackLivesMatter (corresponding to p-values of \(0.027\) and \(0.0002\) for racist and offensive evaluations, respectively). 4c–4d. Differences between conservative participant evaluations of neutral tweets with appended hashtags versus without were much less significant. The addition of #AllLivesMatter had the weakest effect (corresponding to p-values of \(0.915\) and \(0.812\) for racist and offensive evaluations, respectively), followed by the addition of #BlackLivesMatter (corresponding to p-values of \(0.102\) and \(0.028\) for racist and offensive evaluations, respectively). Figure courtesy of the authors.
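To illustrate the kind of comparison reported in the caption, the sketch below computes an independent two-sample t statistic for binary ratings (1 for racist or offensive, 0 otherwise) with and without an appended hashtag. The caption does not specify the t-test variant, so the pooled-variance form is an assumption. The p-value uses a large-sample normal approximation in place of the t distribution, and the toy data are invented.

```python
import math
from statistics import NormalDist, mean, variance


def pooled_t_test(a, b):
    """Return (t, degrees of freedom, approximate two-sided p-value)."""
    na, nb = len(a), len(b)
    df = na + nb - 2
    pooled = ((na - 1) * variance(a) + (nb - 1) * variance(b)) / df
    if pooled == 0:
        raise ValueError("both samples are constant; t is undefined")
    t = (mean(a) - mean(b)) / math.sqrt(pooled * (1 / na + 1 / nb))
    # Assumption: normal approximation, reasonable only for large df.
    p_approx = 2 * (1 - NormalDist().cdf(abs(t)))
    return t, df, p_approx


# Invented ratings: 30 of 100 flagged with a hashtag, 10 of 100 without.
with_hashtag = [1] * 30 + [0] * 70
without_hashtag = [1] * 10 + [0] * 90
t, df, p = pooled_t_test(with_hashtag, without_hashtag)
```

For extremely small p-values, such as those reported for #AllLivesMatter among liberal participants, this floating-point tail computation loses precision, and a statistics library's t-test routine would be the better tool.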

Conclusions

In the U.S. and elsewhere, particularly in diverse nations, political identity is increasingly the dominant factor that drives much social behavior [8]. In our study, we demonstrated that perceptions of the race-relevant hashtags #BlackLivesMatter and #AllLivesMatter among U.S. participants diverge considerably in ways that are dictated by political orientation. Participants on the political right were more likely to rate tweets with #BlackLivesMatter as offensive and racist, while participants on the political left were more likely to rate tweets with #AllLivesMatter as offensive and racist. Political orientation was more strongly predictive of these divergent responses than demographic factors such as age, gender, religiosity, or race.

Our study illuminates a present-day association between identities, viewpoints, and signals that can inform our understanding of politically relevant communication both online and off. More generally, it helps demonstrate the extent to which identity (including political identity within an allegedly integrated society) can dramatically shape the processing and interpretation of information. This realization can have important societal ramifications, as rational conversations about important concepts require firm grounding in the use of particular terms. Disagreements about the meaning of “Black Lives Matter” and “All Lives Matter,” as well as about whether content is or is not racist and/or offensive, are likely to hinder society’s ability to reach consensus or even compromise on these and related issues.

Maia Powell presented this research during a minisymposium presentation at the 2022 SIAM Annual Meeting, which took place in Pittsburgh, Pa., last summer.

Acknowledgments: Arnold Kim acknowledges support from the National Science Foundation (NSF) (DMS-1840265), and Maia Powell acknowledges support from the NSF Graduate Research Fellowship Program (NSF-1744620, NSF-2139297). The authors also thank Emilio Lobato for help with survey implementation and Harish Bhat for statistical assistance (both of the University of California, Merced).

References

[1] Carney, D.R., Jost, J.T., Gosling, S.D., & Potter, J. (2008). The secret lives of liberals and conservatives: Personality profiles, interaction styles, and the things they leave behind. Polit. Psychol., 29(6), 807-840.

[2] Francis, M.M., & Wright-Rigueur, L. (2021). Black Lives Matter in historical perspective. Annu. Rev. Law Soc. Sci., 17(1), 441-458.

[3] Gallagher, R.J., Reagan, A.J., Danforth, C.M., & Dodds, P.S. (2018). Divergent discourse between protests and counter-protests: #BlackLivesMatter and #AllLivesMatter. PLOS ONE, 13(4), e0195644.

[4] Goffman, E. (1959). The presentation of self in everyday life. New York, NY: Anchor Books.

[5] Ince, J., Rojas, F., & Davis, C.A. (2017). The social media response to Black Lives Matter: How Twitter users interact with Black Lives Matter through hashtag use. Ethn. Racial Stud., 40(11), 1814-1830.

[6] Kahan, D.M., Hoffman, D.A., Braman, D., & Evans, E. (2012). “They saw a protest”: Cognitive illiberalism and the speech-conduct distinction. Stanford Law Rev., 64, 851-905.

[7] Kim, S. & Lee, A. (2022). Black Lives Matter and its counter-movements on Facebook. Preprint, Social Science Research Network.

[8] Mason, L. (2018). Uncivil agreement: How politics became our identity. Chicago, IL: University of Chicago Press.

[9] Smaldino, P.E. (2019). Social identity and cooperation in cultural evolution. Behav. Processes, 161, 108-116.

[10] Tawa, J., Ma, R., & Katsumoto, S. (2016). “All lives matter”: The cost of colorblind racial attitudes in diverse social networks. Race Soc. Prob., 8(2), 196-208.

[11] Van Bavel, J.J., & Pereira, A. (2018). The partisan brain: An identity-based model of political belief. Trends Cog. Sci., 22(3), 213-224.

[12] Waseem, Z., & Hovy, D. (2016). Hateful symbols or hateful people? Predictive features for hate speech detection on Twitter. In Proceedings of the NAACL student research workshop (pp. 88-93). San Diego, CA: Association for Computational Linguistics.

Maia Powell (she/her/hers) is a Ph.D. candidate in the Applied Mathematics Department at the University of California, Merced. She received a National Science Foundation Graduate Research Fellowship in 2020 and is an active member of multiple graduate clubs at UC Merced, including the Graduate Student Association and RadioBio podcast. Her research examines the nature of disagreement on social media, structural differences between different types of speech, and spread of misinformation online.

Arnold D. Kim is a founding member of the Department of Applied Mathematics at the University of California, Merced. His research focuses on wave propagation and scattering with applications in imaging, spectroscopy, remote sensing, photonics, and plasmonics. He also studies data science with applications to social justice and gamification.

Paul E. Smaldino is an associate professor of cognitive and information sciences and faculty in the Quantitative and Systems Biology graduate program at the University of California, Merced. He is also an external professor at the Santa Fe Institute and a research affiliate at Stanford University’s Center for Advanced Study in the Behavioral Sciences. Much of his work involves building and analyzing mathematical models and computer simulations to study how behaviors emerge and evolve in response to social, cultural, and ecological pressures, as well as how those pressures can themselves evolve.